Computers operate using a binary system, which means they represent information using only two digits: 0 and 1. Every piece of data, from text to images to programs, is ultimately broken down into a sequence of bits. As every piece of information are represented in bits, we need a method to represent words using some meningful numbers. The simplest way to represent words in computer is using a one-hot-encoding. But, those kind of representation does not posses any valueable information about the language. As example, we can multiply two one-hot-vectorized words which produce 0 as result. To resolve this issuce a more discrete representation of words are developed, known as word2vec. The original study for word2vec has been proposed by (Mikolov et al., 2013a; Mikolov et al., 2013b) at google.

In this article I’m going to describe how word2vec architechture works and alongside we are going to develop a python implementation of it. At the end of this article some useful resources and applications of the model has been depicted.

Data Processing

Collect Dataset

For simplicity, we are going to use a book corpus titled “Alice in a Wonderland” (available at gutenbert.org). We are going to use a single chapter from the whole text. In the pre-processing step, all the texts are tokenized using a word tokenizer. Now, we have list of distinct words as dictionary, and list of sentence corpus. Our sentence corpus stored in alice.txt which contains list of sentences separated by new line.

# OPEN DATASET

import requests

with open("alice.txt", "r") as file:

dataset = file.read().split("\n")

First 5 sentences from the dataset are shown below

>>> print(dataset[:5])

['Alice was beginning to get very tired of sitting by her sister on the bank',

'and of having nothing to do',

'once or twice she had peeped into the book her sister was reading',

'but it had no pictures or conversations in it',

'and what is the use of a book']

After reading the entire corpus, we use nltk.tokenize.word_tokenize to tokenize the sentences into list of words, and we store them into a set. We called it a dictionary.

# CREATING DICTIONARY

import nltk

nltk.download("punkt") # punkt package required

from nltk.tokenize import word_tokenize

dictionary = set() # set of distinct tokens

for sent in dataset:

dictionary.update(word_tokenize(sent.lower()))

Showing first 10 set of words in the dictionary below

>>> print(list(dictionary)[:10])

['me', 'hoping', 'finger', 'drink', 'were', 'children', 'turned', 'which', 'bats', 'ever']

Data Pre-Processing

In the preprocessing stage, we split the entire corpus into list of sentences. After that, all the puhnchuation marks from the sentence has been removed.

# DOWNLOAD DATASET FROM WEB

import requests

def get_data(url):

return requests.get(url).text

# REMOVE PUNCHUATION

import re

def remove_punc(sentence):

return re.sub(r'[^\w\d\s]+', '', sentence)

# GET DICTIONARY AND SENTENCES

def get_data(url):

response = requests.get(url).text

dictionary, sentences = set(), list()

dataset = " ".join(response.split("\n")) # to remove unwanted \n

dataset = re.sub('[.!?]', "\n", dataset) # split at .!?

for sent in dataset.split("\n"):

sentence = remove_punc(sent.strip()).lower()

dictionary.update(sentence.split(" "))

sentences.append(sentence)

return sentences, list(dictionary)

# DRIVING CODE

url = "https://norvig.com/ngrams/smaller.txt"

sentences, dictionary = get_data(url)

Word Lookup Table

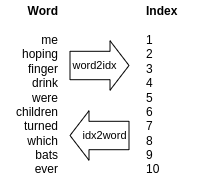

After tokenizing all words (i.e. list of all unique tokens), we need to convert the words to index and index to word lookup table, so that we can indicate a particular word by it’s index and vice versa, respectively. This practice is needed in operations such as one-hot-encoding.

Word to Index and Index to Word conversion

Word to Index and Index to Word conversion

The below code snippet is used to generate the lookup tables

# LOOKUP TABLE

def get_lookup_tables(tokens):

word2idx = dict(zip(tokens, list(range(len(tokens)))))

idx2word = dict(zip(list(range(len(tokens))), tokens))

return word2idx, idx2word

# GET TABLES

word2idx, idx2word = get_lookup_tables(dictionary)

Now, we can use word2idx to generate index for a unique word and idx2word to get word by its index. Below test snippet shows index generation for a single word “plainly” and vice versa

>>> print(word2idx["plainly"])

45

>>> print(idx2word[45])

plainly

Supporting Libraries

Softmax

Word2vec use a popular activation function in its output-layer known as Softmax. This function is used to convert a set of numbers in between [0,1]. The sum of softmax output value is 1. The Softmax function numerically defined as below

Python implementation of the above equation is given below

# SOFTMAX

def softmax(nums):

denominator = np.sum(np.exp(nums))

return np.exp(nums)/denominator

Below code snippet shows the working of Softmax function on a random set of numbers containing [1,2,3,4]

>>> softmax([1,2,3,4])

array([0.0320586 , 0.08714432, 0.23688282, 0.64391426])

>>> sum(softmax([1,2,3,4])) == 1

True

To know more about Softmax function, follow youtube lecture titled “Softmax Function Explained In Depth with 3D Visuals” made by Elliot Waite.

Random Matrix Generation

In word2vec, each words assigned as a specific dimension vectors. Initially those vectors are assigned randomly with very small weight. In upcoming sections, we will study what are those vectors that we need to consider. To get random vectors with dimension \(d\), below function is developed

# MATRIX INITIALIZATION

def matrix_initialization(d):

return np.random.rand(d,1) * 0.01

Here, 0.01 is multiplied with the matridx in order to make the random weights very small (i.e. instead of assigning to 0, we define weights as very small values).

One Hot Encoding

The simplest way of representing a word is using One-hot-encoding, which is a vector of length

\(|V|\)

(here V is the vocabulary) in which every index has the numerical value 0 except the index of the target word in the vocabulary. Every words from a sequence of the input sentence converted into its equivalalnt one-hot-embbedded vectors and treated as input vector. Even though one-hot vectors are the input of any NN model, it does not reflect any useful information about the language models. As example, if we multiply two One-hot embedded vectors, they simply produce 0 as result vector. The syntactic representation using python shown below

# ONE HOT EMBEDDED VECTOR CREATION

def one_hot_embedding(word):

res = np.zeros((len(v),))

res[word2idx(word)] = 1

retuurn res

Model Definition

Word to vector model can be defined in mainly two ways: (i) Continuous Bag of Words (CBOW): where we try to estimate the middle word based on surrounding context words. (ii) Skip Gram: which predicts words within a certain range before and after the current word in the same sentence (tensorflow.org, 2024). Among this two approaches, any one can be used to vectorize the tokens. In this article we mostly focus on Skip Gram approach.

By training methods, word2vec can trained using various ways (CS224N, 2017), some of which are: (i) Hierarical Softmax, (ii) Negative Sampling, (iii) Naive Softmax, etc. In the next few section, Skip Gram model with Hierarical Softmax has been described.

Target and Context Generation

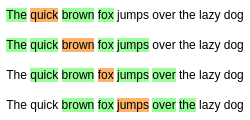

Now, given input sequence, we need to determine our target and context tokens. For this purpose we are going to define a fixed window of size L, in which we define center word as target word and L tokens from left and right are going to act as a context words. The below example shows the intuition

Target and context word in window L

Target and context word in window L

Here, the central word denote by the color orange and an window of size +-2 (i.e. 2 from the left and 2 from the right) defined as context words by the color green. Below code snippet used to extract all the target and context pairs from the input sequence

# GENERATE TARGET CONTEXT PAIRS

def get_pairs(sent, target, window):

windowL = max(0, target-window)

windowR = min(len(sent)-(target+window)-1, len(sent))

temp = sent[windowL:target] + sent[target:windowR]

return list(zip())

After defninig the word and context pairs, all the tuples are extracted from the input sequence. For the second pass in the above image, when target word is brown, the (target, context) paris are: {(brown, the), (brown, quick), (brown, fox), (brown, jumps)}; Our objective is to maximize the probability

\(P(w|c_i)\)

where \(w\) is the target word and \(c_i\) are the context words.

Here each of the tokens defined by a fixed dimension \(d\) (For example, In our case we assume \(d\) as 300). Those fixed dimension are always helpful when we calculating for very large corpus.

Similarity Calculation

Forward Propagation

Loss Calculaion

Backword Propagation

Training

Evaluation & Testing

GloVe

Applications

Music Recommendation System

Sentiment Analysis

Named Entitiy Recognition

Transformer Representation

References

- Word2vec from scratch [jaketae.github.io]

- Using Word2vec for Music Recommendations [towardsdatascience.com]

- word2vec: by tensorflow tutorial [tensorflow.org]

Thankyou for considering this article. This article is solily based on open resources. In case you want to update the post, fell free to raise an issue (For farther information visit contribution.md). Any contribution to this article are always welcomed.